Traditionally, the term Intelligent Personal Assistant is associated with Siri, Google Now, or Cortana and with voice-based communication. For me, the term means something different: an extendable, open, modular platform that does useful things for the user, with user privacy at the centre. I have been experimenting with various ways to build something like this for the last few years in my spare time. I consider it a research hobby, although I would love to see Personal Assistant Platforms come into existence and use. In this post I would like to share some ideas and observations.

My approach is closer to the one used in platforms that have become quite popular in recent years because of the ChatOps movement. The idea is to use a chat-based user interface and “bots” that listen for patterns and commands in chat rooms and react to messages using coded “skills”. ChatOps solutions typically concentrate on coordinating human activities and automating tasks with bots. The same ideas (and software) can be used for building Personal Assistants. I am interested in exploring the core assistant architecture, so I decided (after trying a few different bot frameworks) to use JASON [1]. JASON is not a typical bot platform; it is a framework for building multi-agent systems. JASON is written in Java, but agent behaviours and declarative knowledge are coded in AgentSpeak [2], a high-level language with some similarity to Prolog.
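To make the pattern-and-skill idea concrete, here is a minimal Python sketch. It is not taken from any particular bot framework, and the names `skill`, `SKILLS` and `handle_message` are made up for illustration; it only shows the general shape of "listen for a pattern, run a coded skill".

```python
import re
from typing import Callable, List, Optional, Tuple

# Hypothetical skill registry: each entry pairs a compiled regex with a handler.
Skill = Callable[[re.Match], str]
SKILLS: List[Tuple[re.Pattern, Skill]] = []


def skill(pattern: str) -> Callable[[Skill], Skill]:
    """Decorator that registers a handler for messages matching `pattern`."""
    def register(handler: Skill) -> Skill:
        SKILLS.append((re.compile(pattern, re.IGNORECASE), handler))
        return handler
    return register


@skill(r"^(hi|hello|hey)\b")
def greet(match: re.Match) -> str:
    return "Hello! How can I help?"


@skill(r"^remind me to (?P<task>.+)$")
def remind(match: re.Match) -> str:
    return f"OK, I will remind you to {match.group('task')}."


def handle_message(text: str) -> Optional[str]:
    """Return the reply of the first matching skill, or None if none matches."""
    for pattern, handler in SKILLS:
        match = pattern.search(text.strip())
        if match:
            return handler(match)
    return None
```

A bot built this way stays silent on messages no skill recognises, which is usually the desired behaviour in a shared chat room.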

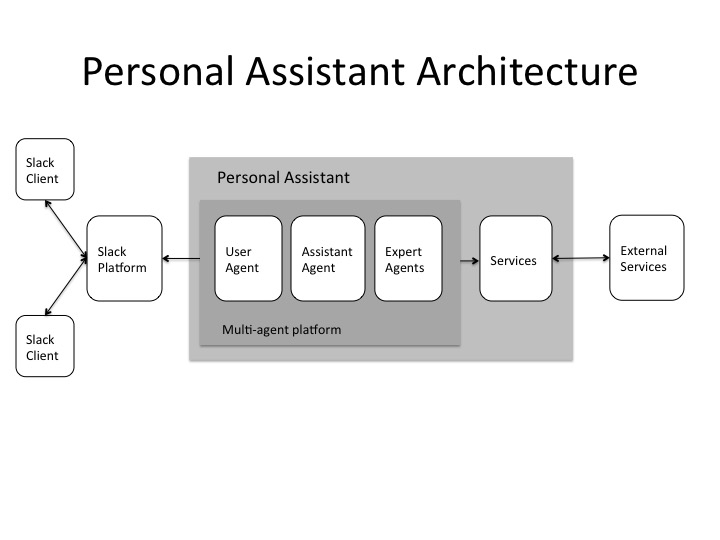

The current architecture is shown in the figure above.

I use Slack as the chat platform. Slack provides user apps for various devices, allows messages with rich content, and has APIs for bot integration. There are a couple of API variations, but the most attractive from the Personal Assistant perspective is the WebSocket-based Real Time Messaging API. With this API, the bot (assistant) can run in a cloud, on an appliance at your home, or just on your desktop computer (if you still have one). Slack's group capabilities are also helpful: they make it possible to communicate with various versions and types of assistants. I use a node.js-based gateway to decouple Slack from the JASON-based infrastructure, and Redis as a simple messaging medium. There is nothing special about node.js and Redis in this architecture, except that both are easy to work with when implementing “plumbing”. AgentSpeak cannot talk to external services directly, but JASON can be extended through Java components. I coded a basic integration with Redis pubsub inside my JASON solution, and for advanced scenarios (when I need services with lots of plumbing) I use node.js or other service frameworks.
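The decoupling role of the gateway can be sketched in a few lines. The Python below is only an illustration under stated assumptions: my gateway is written in node.js and talks to a real Redis server, whereas here `InMemoryBus` is a stand-in for Redis pubsub, the channel names `assistant:inbound` and `assistant:outbound` are hypothetical, and the chat event is reduced to the `type`, `user` and `text` fields.

```python
import json
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

INBOUND = "assistant:inbound"    # hypothetical channel: chat -> agents
OUTBOUND = "assistant:outbound"  # hypothetical channel: agents -> chat


class InMemoryBus:
    """Stand-in for Redis pubsub: maps a channel name to subscriber callbacks."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, channel: str, callback: Callable[[str], None]) -> None:
        self._subscribers[channel].append(callback)

    def publish(self, channel: str, payload: str) -> int:
        """Deliver payload to every subscriber and return how many received it."""
        receivers = list(self._subscribers[channel])
        for callback in receivers:
            callback(payload)
        return len(receivers)


class Gateway:
    """Translates chat events into internal messages and back again."""

    def __init__(self, bus: InMemoryBus, send_to_chat: Callable[[str], None]) -> None:
        self.bus = bus
        # Anything the agents publish on OUTBOUND is forwarded to the chat.
        bus.subscribe(OUTBOUND, lambda payload: send_to_chat(json.loads(payload)["text"]))

    def on_chat_event(self, event: Dict[str, str]) -> None:
        # Only plain messages are forwarded; other event types are ignored here.
        if event.get("type") == "message":
            internal = {"user": event["user"], "text": event["text"]}
            self.bus.publish(INBOUND, json.dumps(internal))
```

Because the agents only ever see the internal JSON messages, the chat platform could be swapped without touching the multi-agent side.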

The Personal Assistant is implemented as a multi-agent system with additional components in node.js (and other frameworks as needed) communicating through Redis. At the centre of the multi-agent system are the Assistant Agent, the User Agent, and various Expert agents. The User Agent is responsible for communicating with the user, the Assistant Agent plays the role of coordinator, and Expert agents implement specific skills. Expert agents can delegate low-level “plumbing” details to services. The system is highly concurrent and runs well on multicore computers. JASON also allows a multi-agent system to run on several computers, but this is outside my current experiments.

Agents can create other agents and can use direct message-based communication (sync and async) or pubsub-based message broadcasting. Each agent has local storage implemented as a Prolog-like associative term memory, plus logical rules and scripts. AgentSpeak follows the BDI (Belief–Desire–Intention) software model [3], which makes it possible to code quite sophisticated behaviours in a compact way.

Prior to JASON I tried to implement the same agents with traditional software stacks such as node.js and Akka Actors. I also looked briefly at Elixir and Azure Actors. They are all good; it just takes more time and more lines of code to get to the essence of interesting behaviours. I actually tried JASON earlier but made a mistake: I started by implementing the Personal Assistant as a “very smart” singleton agent. Bad idea! Currently the JASON-based assistant is a multi-agent system with many very specialized “experts”.
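For readers who have not met the BDI model, the toy Python below may help. It is not AgentSpeak and not how JASON works internally (there is no unification, no intention stack, no plan failure handling); it is a deliberately simplified sketch in which beliefs are tuples, events trigger plans, and a plan is applicable only when its context holds against the current beliefs. All names (`Plan`, `Agent`, `on_online`, `do_greet`) are invented for this example.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, List, Set, Tuple

Term = Tuple[str, ...]  # e.g. ("online", "alice")


@dataclass
class Plan:
    trigger: str                                  # event name the plan responds to
    context: Callable[[Set[Term], Term], bool]    # guard checked against beliefs
    body: Callable[["Agent", Term], None]         # what to do when selected


@dataclass
class Agent:
    plans: List[Plan]
    beliefs: Set[Term] = field(default_factory=set)
    events: Deque[Term] = field(default_factory=deque)
    actions: List[str] = field(default_factory=list)  # log of external actions

    def post(self, *event: str) -> None:
        self.events.append(event)

    def step(self) -> bool:
        """One cycle: take an event, run the first plan whose trigger and context match."""
        if not self.events:
            return False
        event = self.events.popleft()
        for plan in self.plans:
            if plan.trigger == event[0] and plan.context(self.beliefs, event):
                plan.body(self, event)
                break
        return True

    def run(self) -> None:
        while self.step():
            pass


def on_online(agent: Agent, event: Term) -> None:
    agent.beliefs.add(("online", event[1]))
    agent.post("greet", event[1])  # adopt a sub-goal

def do_greet(agent: Agent, event: Term) -> None:
    agent.actions.append(f"say: Hi, {event[1]}!")
    agent.beliefs.add(("greeted", event[1]))

plans = [
    Plan("online", lambda beliefs, e: True, on_online),
    # Greet only users believed to be online and not yet greeted.
    Plan("greet",
         lambda beliefs, e: ("online", e[1]) in beliefs and ("greeted", e[1]) not in beliefs,
         do_greet),
]
```

Even in this reduced form, the behaviour lives in small declarative pieces (trigger, context, body) instead of one large handler, which is the compactness I value in AgentSpeak.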

Let’s look at the “Greeting” expert agent, for example. This agent can send variations of the “Hi” message to the user and then wait (for some time) for a greeting in response. The same agent listens for variations of the “Hi” message from the user. In other words, the Greeting agent implements bi-directional, mixed-initiative communication with the user: it can initiate a greeting and wait for the user's response, or it can respond to the user's greeting. In addition, the Greeting agent reacts to changes in the user's online presence and remembers the last greeting exchange. After a successful greeting exchange it broadcasts a “successful greeting” message, which can initiate additional micro conversations.
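The mixed-initiative behaviour described above can be sketched as a small state machine. This Python version is only an approximation of the idea, not the AgentSpeak code: the class `GreetingAgent`, the `wait_seconds` and `regreet_after` parameters, the greeting regex and the event name `successful_greeting` are all assumptions made for the example, and time is passed in explicitly to keep the sketch deterministic.

```python
import re
from typing import Callable, Optional

GREETING = re.compile(r"^\s*(hi|hello|hey)\b", re.IGNORECASE)


class GreetingAgent:
    def __init__(self, say: Callable[[str], None], broadcast: Callable[[str], None],
                 wait_seconds: float = 60.0, regreet_after: float = 3600.0) -> None:
        self.say = say                    # sends a message to the user
        self.broadcast = broadcast        # notifies the other agents
        self.wait_seconds = wait_seconds  # how long to wait for a reply
        self.regreet_after = regreet_after
        self.waiting_since: Optional[float] = None  # set while a reply is awaited
        self.last_exchange: Optional[float] = None  # time of last completed greeting

    def _awaiting_reply(self, now: float) -> bool:
        return (self.waiting_since is not None
                and now - self.waiting_since <= self.wait_seconds)

    def on_presence(self, online: bool, now: float) -> None:
        """Agent initiative: greet a user who comes online, unless greeted recently."""
        recently_greeted = (self.last_exchange is not None
                            and now - self.last_exchange < self.regreet_after)
        if online and not recently_greeted and not self._awaiting_reply(now):
            self.say("Hi!")
            self.waiting_since = now

    def on_message(self, text: str, now: float) -> None:
        """Either the reply to our greeting, or a greeting started by the user."""
        if not GREETING.match(text):
            return
        if not self._awaiting_reply(now):
            self.say("Hello!")  # user initiative: answer the greeting
        self.waiting_since = None
        self.last_exchange = now
        self.broadcast("successful_greeting")
```

Whichever side starts, the exchange ends in the same place: the time is remembered and the broadcast gives other experts a chance to open their own micro conversations.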

Reactivity, autonomy, and expertise are the primary properties of the Assistant multi-agent system. Various expert agents run in parallel: they have goals, react to events, develop and execute plans, notify other agents about events, and compete for the user's attention. Although many agents run simultaneously, there is a concept of the “focus agent” that drives communication with the user. Even without requests from the user, the Assistant is active: it may send notifications to the user, ask questions, or suggest starting or continuing micro conversations.
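One possible way to arbitrate between experts competing for attention is sketched below in Python. The rule used here (the focus agent speaks immediately, everyone else waits in a priority queue until focus is released) is an assumption chosen for illustration, not a description of how my assistant selects the focus agent; `Coordinator`, `request`, `release` and the expert names are hypothetical.

```python
import heapq
from typing import List, Optional, Tuple


class Coordinator:
    """Keeps a single focus agent and queues messages from the other experts."""

    def __init__(self) -> None:
        self.focus: Optional[str] = None
        # Heap entries: (-priority, sequence number, agent name, text).
        self._pending: List[Tuple[int, int, str, str]] = []
        self._seq = 0
        self.delivered: List[Tuple[str, str]] = []  # what the user actually saw

    def request(self, agent: str, text: str, priority: int = 0) -> None:
        """An expert asks to say something to the user."""
        if self.focus is None or self.focus == agent:
            self.focus = agent
            self.delivered.append((agent, text))
        else:
            heapq.heappush(self._pending, (-priority, self._seq, agent, text))
            self._seq += 1

    def release(self, agent: str) -> None:
        """The focus agent is done; pass focus to the highest-priority waiting message."""
        if self.focus != agent:
            return
        self.focus = None
        if self._pending:
            _, _, next_agent, text = heapq.heappop(self._pending)
            self.focus = next_agent
            self.delivered.append((next_agent, text))
```

The sequence number keeps messages of equal priority in arrival order, so a low-priority expert is delayed but never reordered behind a later message of the same priority.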

JASON directly supports hand-coded reactive and goal-oriented behaviours, which can be quite sophisticated. It can also be extended with more advanced approaches such as machine learning, simulation of emotions, ethical decision making, and self-modelling. My current interest is mostly in experimenting with various expert agents, coordination of activities, and user experience, but I am looking forward to exploring the other topics.

- [1] JASON: https://en.wikipedia.org/wiki/Jason_(multi-agent_systems_development_platform)

- [2] AgentSpeak: https://en.wikipedia.org/wiki/AgentSpeak

- [3] Belief–Desire–Intention software model: https://en.wikipedia.org/wiki/Belief–desire–intention_software_model